7D twisting and tagging

I've been thinking about the terms we should use to machine tag our 7D tiltometer metadata.

The terms:

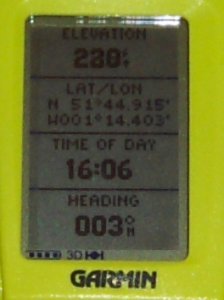

1. Altitude

2. Latitude

3. Longitude

4. Compass bearing

5. Tilt

6. Orientation

7. Timestamp

Some semantics:

By tilt, we mean rotation around the X axis - or, are we pointing the camera at our feet?

By orientation, we mean rotation around the Z axis - or, are we doing a wacky half-portrait half-landscape shot?

By compass bearing, we mean rotation around the Y axis. North, East, North-North-East. Oh, you know what a compass looks like..

OK, I've got two aims:

A. Use the semantically correct term.

B. Use whatever everyone else is using - accept existing data.

Since we're hoping to make use of user-contributed photo metadata, not just what we've cooked up ourselves, B. is really important to us.

altitude, latitude, longitude -> geo:

OK, these first three are a no-brainer on both criteria: geo: is the preferred namespace prefix for the W3C SWIG Basic Geo (WGS84 lat/long) Vocabulary. And thousands of taggers have already voted with their fingers.

compass bearing, tilt -> ge:

Google Earth / KML / FlickrFly offer ge:tilt (meaning the same as we do), and ge:heading (meaning compass bearing).

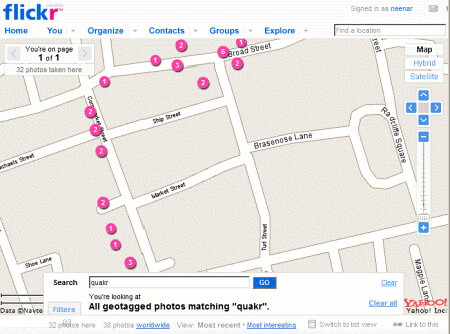

The use of 'ge' seems a bit unsatisfactory to me. 'G'oogle 'E'arth describes the application, not the data. But ho hum, 9268 people don't agree, so that's a decider for me.

We are also stuck with the problem that FlickrFly have been recommending 'head', while the KML vocab defines 'heading'. 'Head' is the statistical winner. But perhaps we should double-tag and use both?
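Here's a rough sketch of what "accept both, write both" might look like. It assumes tags arrive as plain `namespace:predicate=value` strings; the function names, and the choice to wrap bearings into the 0-360 range, are mine for illustration and not anything FlickrFly or KML prescribe.

```python
# Both spellings seen in the wild; "heading" is the KML term, "head" the FlickrFly one.
HEADING_KEYS = ("ge:heading", "ge:head")


def read_heading(tags):
    """Return the first compass bearing found in a list of machine tags, or None."""
    for tag in tags:
        key, sep, value = tag.partition("=")
        if sep and key.strip().lower() in HEADING_KEYS:
            try:
                # Assumption: bearings are degrees, wrapped into [0, 360).
                return float(value) % 360.0
            except ValueError:
                continue  # not a number; keep looking
    return None


def heading_tags(bearing):
    """Double-tag: emit the bearing under both spellings."""
    return [f"{key}={bearing}" for key in HEADING_KEYS]
```

So `read_heading` copes with whichever spelling a contributor used, and `heading_tags` keeps our own photos findable under both.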

Orientation -> exif ?

ge:rotation doesn't quite capture our meaning. Strictly, exif:orientation's value should be restricted to a fixed set of right-angle positions (and their mirror images). But it does have that usability factor - its meaning is understandable.. so I think we'll cheat and use it anyway for now.

timestamp -> dc: / exif: ?

This is a tricky one. What do people actually do? Well.. mainly nobody tags this at all - this data belongs in the EXIF pile that your camera puts there for free.

Given that.. I'm tempted to go with exif on the basis that it's more familiar to amateur photographers, and thus easier to type in the box. (and persuade others to type in the box..?)

Really, we're trying to squeeze an exif-shaped datum into a tag-shaped hole on this one. Perhaps we should be querying the exif data instead?..
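If we do end up reading the timestamp from EXIF, the value comes as a string in the form `YYYY:MM:DD HH:MM:SS` (colons in the date part too). A minimal sketch of turning that into something sortable, assuming we've already pulled the string out of the file by some other means; `parse_exif_datetime` is a made-up helper name:

```python
from datetime import datetime

# EXIF date-time layout: colons separate the date fields as well as the time fields.
EXIF_FORMAT = "%Y:%m:%d %H:%M:%S"


def parse_exif_datetime(text):
    """Parse an EXIF-style date-time string into a naive datetime.

    EXIF carries no time zone here, so the result is naive on purpose.
    Raises ValueError if the string doesn't match the layout.
    """
    return datetime.strptime(text.strip(), EXIF_FORMAT)
```

Note the result has no time zone attached, which is another reason this datum sits awkwardly in a tag.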

Provisional quakr set:

1. geo:alt

2. geo:lat

3. geo:long

4. ge:heading

5. ge:tilt

6. exif:orientation

7. exif:dateTime
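To make the set concrete, here's a small sketch that turns one 7D reading into tag strings. The `Pose7D` class and its field names are hypothetical, the `key=value` layout is my assumption about how we'd write the tags, and units are deliberately left unspecified since we haven't pinned them down.

```python
from dataclasses import dataclass


@dataclass
class Pose7D:
    """Hypothetical container for one reading; field names are illustrative only."""
    alt: float
    lat: float
    long: float
    heading: float
    tilt: float
    orientation: float
    date_time: str  # kept as the raw EXIF-style string


def to_machine_tags(pose):
    """Map a reading onto the provisional tag set, in the order listed above."""
    pairs = [
        ("geo:alt", pose.alt),
        ("geo:lat", pose.lat),
        ("geo:long", pose.long),
        ("ge:heading", pose.heading),
        ("ge:tilt", pose.tilt),
        ("exif:orientation", pose.orientation),
        ("exif:dateTime", pose.date_time),
    ]
    return [f"{key}={value}" for key, value in pairs]
```

Usage would be something like `to_machine_tags(Pose7D(10.0, 51.45, -2.6, 90.0, 0.0, 0.0, "2007:03:01 12:00:00"))`, giving seven strings ready to paste into the tag box.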